Introduction

We describe the use of the Gregory-Loredo algorithm (Gregory & Loredo 1992) to detect temporal variability, based on the event files, in sources identified in the CXC L3 pipeline (Evans et al. 2006). Briefly, the $N$ events are binned in histograms of $m$ bins, where $m$ runs from 2 to $m_{\max}$.
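The binning step can be sketched in C, the language of the implementation. This is a minimal illustration, not the program's code: the function name `bin_events` is hypothetical, and it assumes equal-width bins spanning the whole observation $[t_b, t_e]$ (start and end times), with no data gaps or effective-area corrections. Each event is mapped to its fractional position $(t - t_b)/(t_e - t_b)$ in the observation, and that fraction times $m$ gives its bin.

```c
#include <stddef.h>

/* Hypothetical helper: histogram n_events event times into m equal-width
 * bins covering [tb, te]. Events outside the interval are skipped.
 * counts must have room for m entries. Returns the number of events binned. */
long bin_events(const double *t, size_t n_events, double tb, double te,
                int m, long *counts)
{
    long used = 0;
    for (int j = 0; j < m; j++)
        counts[j] = 0;
    for (size_t k = 0; k < n_events; k++) {
        if (t[k] < tb || t[k] > te)
            continue;
        /* fractional position in the observation, scaled to a bin index */
        int j = (int)((t[k] - tb) / (te - tb) * m);
        if (j >= m)
            j = m - 1;            /* an event exactly at te goes in the last bin */
        counts[j]++;
        used++;
    }
    return used;
}
```

Calling `bin_events` once per value of $m$ yields the histograms $n_1, \ldots, n_m$ used below.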

The algorithm is based on the likelihood of the observed distribution $n_1, n_2, \ldots, n_m$ occurring. Out of a total of $m^N$ possible distributions, the multiplicity of this particular one is $W_m = N!/(n_1!\,n_2!\cdots n_m!)$. The ratio of this multiplicity to the total number, $W_m/m^N$, is the probability that this distribution came about by chance; its inverse is therefore a measure of the significance of the distribution. In this way we calculate an odds ratio for $m$ bins versus a flat light curve. The odds are summed over all values of $m$ to determine the odds that the source is time-variable.

The method works very well on event data and is capable of dealing with data gaps. We have added the capability to take temporal variations in effective area into account. As a byproduct, it delivers a light curve with optimal resolution.

Although the algorithm was developed for detecting periodic signals, it is a perfectly suitable method for detecting plain variability by forcing the period to the length of the observation.

We have implemented the G-L algorithm as a standard C program that operates on simple ASCII files for ease of experimentation. Input data consist of a list of event times and, optionally, good time intervals, which may in turn carry a normalized effective area.
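As an illustration of the ASCII input, the following sketch reads good time intervals with an optional third column for the normalized effective area, defaulting to 1.0 when it is absent. The line layout (`start stop [area]`, with `#` comment lines and blank lines skipped), the `Gti` type, and `read_gtis` are assumptions made for this example, not the program's documented format.

```c
#include <stdio.h>

/* Hypothetical record for one good time interval. */
typedef struct {
    double start, stop;   /* interval [start, stop] */
    double area;          /* normalized effective area; 1.0 if absent */
} Gti;

/* Read up to max_gti lines of the assumed form "start stop [area]".
 * Lines that do not begin with two numbers (comments, blanks) are skipped.
 * Returns the number of intervals stored in gti. */
size_t read_gtis(FILE *fp, Gti *gti, size_t max_gti)
{
    char line[256];
    size_t n = 0;
    while (n < max_gti && fgets(line, sizeof line, fp)) {
        double a, b, area;
        int k = sscanf(line, "%lf %lf %lf", &a, &b, &area);
        if (k < 2)
            continue;                       /* comment, blank, or malformed */
        gti[n].start = a;
        gti[n].stop  = b;
        gti[n].area  = (k == 3) ? area : 1.0;
        n++;
    }
    return n;
}
```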

Two output files are created: one with the odds ratios as a function of $m$, and a light curve file.

If $m_{\max}$ is not explicitly specified, the algorithm is run twice. The first time, all values of $m$ are used up to the minimum of 3000 and $(t_e - t_b)/50$, where $t_b$ and $t_e$ are the start and end times of the observation in seconds; i.e., variability is considered on all time scales down to 50 s, which is about 15 times the most common ACIS frame time. The sum of odds $S(m) = \sum_{i=m_{\min}}^{m} O(i) / (m - m_{\min} + 1)$, i.e., the mean odds ratio over $i = m_{\min}, \ldots, m$, is calculated as a function of $m$, and its maximum is determined. The algorithm is then run again with $m_{\max}$ set to the highest value of $m$ for which $S(m) > \max(S)/\sqrt{e}$. In addition to the total odds ratio $O$, the corresponding probability $P = O/(1 + O)$ that the signal is variable is calculated.
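The two-pass choice of $m_{\max}$ and the final probability can be sketched as follows. All names are hypothetical; `odds[m]` is assumed to hold $O(m)$ for $m = m_{\min}, \ldots, m_{\max}$, and times are in seconds. $S(m)$ is taken to be the running mean $\sum_{i=m_{\min}}^{m} O(i)/(m - m_{\min} + 1)$: with a plain cumulative sum of non-negative odds, $S$ would always peak at the largest $m$ and the $\max(S)/\sqrt{e}$ cut would never remove anything. The conversion $P = O/(1 + O)$ reproduces the pairing of odds and probability ranges in the results table (e.g. $O = 9$ gives $P = 0.9$).

```c
#include <math.h>

/* First-pass limit: min(3000, (te - tb) / 50), i.e. time scales down to 50 s. */
int initial_mmax(double tb, double te)
{
    double m = (te - tb) / 50.0;
    return (m < 3000.0) ? (int)m : 3000;
}

/* Second-pass limit: highest m with S(m) > max(S) / sqrt(e), where
 * S(m) = [sum_{i=mmin}^{m} odds[i]] / (m - mmin + 1). */
int select_mmax(const double *odds, int mmin, int mmax)
{
    double sum = 0.0, smax = -INFINITY;
    for (int m = mmin; m <= mmax; m++) {        /* sweep 1: find max(S) */
        sum += odds[m];
        double s = sum / (m - mmin + 1);
        if (s > smax)
            smax = s;
    }
    double thresh = smax / exp(0.5);            /* exp(0.5) = sqrt(e) */
    int best = mmin;
    sum = 0.0;
    for (int m = mmin; m <= mmax; m++) {        /* sweep 2: last m above cut */
        sum += odds[m];
        if (sum / (m - mmin + 1) > thresh)
            best = m;
    }
    return best;
}

/* Probability of a variable signal from the total odds ratio O. */
double prob_variable(double odds_total)
{
    return odds_total / (1.0 + odds_total);
}
```

For observations shorter than 100 s, `initial_mmax` returns a value below 2, which a real implementation would have to guard against.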

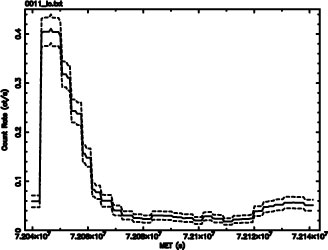

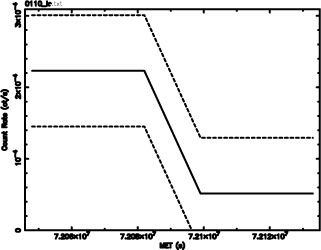

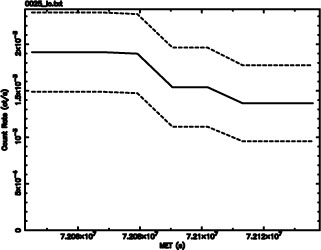

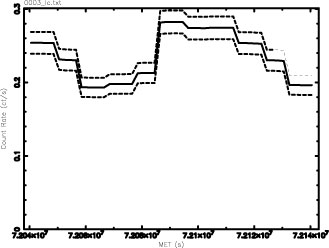

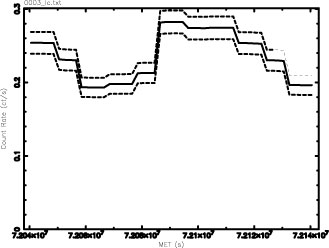

The light curve generated by the program essentially consists of the binnings weighted by their odds ratios and represents the optimal resolution for the curve. The standard deviation $\sigma$ is provided for each point of the curve.
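A sketch of an odds-weighted light curve, assuming the simplest estimator: each binning $m$ contributes the rate $n_j/\Delta t_m$ of the bin containing time $t$, where $\Delta t_m = (t_e - t_b)/m$, weighted by its odds ratio $O(m)$. The names are hypothetical, data gaps and effective area are ignored, and the per-point $\sigma$ is not computed; the program's actual estimator may differ. Events are recounted for every $m$, which is slow for large event lists but keeps the example short.

```c
#include <stddef.h>

/* Index of the equal-width bin (width `width`, starting at tb) holding x. */
static int bin_index(double x, double tb, double width, int m)
{
    int j = (int)((x - tb) / width);
    return (j >= m) ? m - 1 : j;
}

/* Hypothetical sketch: odds-weighted count rate at time t in [tb, te].
 * odds[m] holds O(m) for m = mmin..mmax; each binning contributes the
 * rate n_j / width of the bin that contains t. */
double weighted_rate(double t, const double *times, size_t n_events,
                     double tb, double te, const double *odds,
                     int mmin, int mmax)
{
    if (t < tb || t > te)
        return 0.0;
    double num = 0.0, wsum = 0.0;
    for (int m = mmin; m <= mmax; m++) {
        double width = (te - tb) / m;
        int j = bin_index(t, tb, width, m);
        long nj = 0;                       /* events in the same bin as t */
        for (size_t k = 0; k < n_events; k++)
            if (times[k] >= tb && times[k] <= te &&
                bin_index(times[k], tb, width, m) == j)
                nj++;
        num  += odds[m] * ((double)nj / width);
        wsum += odds[m];
    }
    return (wsum > 0.0) ? num / wsum : 0.0;
}
```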

There is an ambiguous range of probabilities, $0.5 < P < 0.9$, and in particular the range $0.5 < P < 0.67$ (above 0.9 all sources are variable; below 0.5 all are non-variable). For this range we have developed a secondary criterion based on the light curve, its average $\sigma$, and the average count rate. We calculate the fractions $f_3$ and $f_5$ of the light curve that lie within $3\sigma$ and $5\sigma$, respectively, of the average count rate. If $f_3 > 0.997$ and $f_5 = 1.0$ for a case in the ambiguous probability range, the source is deemed non-variable (for comparison, 99.7% of a Gaussian distribution lies within $3\sigma$ of its mean).
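The secondary criterion can be sketched as below, assuming that the light-curve points are equally spaced in time (so a fraction of points equals a fraction of the light curve), that the average count rate is the mean of those points, and that "within" includes the boundary. `secondary_nonvariable` is a hypothetical name; it is meant to be applied only to sources in the ambiguous probability range.

```c
#include <math.h>
#include <stddef.h>

/* Hypothetical sketch of the secondary criterion. rate[k] and sigma[k] are
 * n light-curve points. Stores f3 and f5 and returns 1 if f3 > 0.997 and
 * f5 == 1.0, i.e. the source is deemed non-variable. */
int secondary_nonvariable(const double *rate, const double *sigma, size_t n,
                          double *f3, double *f5)
{
    *f3 = *f5 = 0.0;
    if (n == 0)
        return 0;
    double mean_rate = 0.0, mean_sigma = 0.0;
    for (size_t k = 0; k < n; k++) {
        mean_rate  += rate[k];
        mean_sigma += sigma[k];
    }
    mean_rate  /= (double)n;
    mean_sigma /= (double)n;              /* the light curve's average sigma */

    size_t in3 = 0, in5 = 0;
    for (size_t k = 0; k < n; k++) {
        double d = fabs(rate[k] - mean_rate);
        if (d <= 3.0 * mean_sigma) in3++;
        if (d <= 5.0 * mean_sigma) in5++;
    }
    *f3 = (double)in3 / (double)n;
    *f5 = (double)in5 / (double)n;
    return (*f3 > 0.997 && *f5 == 1.0);
}
```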

| Variability Index | Condition | Comment |
|---|---|---|
| 0 | $0 < P < 0.5$ | Definitely not variable |
| 1 | $0.5 < P < 0.67$ and $f_3 > 0.997$ and $f_5 = 1.0$ | Not considered variable |
| 2 | $0.67 < P < 0.9$ and $f_3 > 0.997$ and $f_5 = 1.0$ | Probably not variable |
| 3 | $0.5 < P < 0.6$ | May be variable |
| 4 | $0.6 < P < 0.67$ | Likely to be variable |
| 5 | $0.67 < P < 0.9$ | Considered variable |
| 6 | $0.9 < P$ and $\log_{10} O < 2.0$ | Considered variable |
| 7 | $2 < \log_{10} O < 4$ | Considered variable |
| 8 | $4 < \log_{10} O < 10$ | Considered variable |
| 9 | $10 < \log_{10} O < 30$ | Considered variable |
| 10 | $30 < \log_{10} O$ | Considered variable |

Since $P = O/(1 + O)$, $P > 0.9$ already implies $O > 9$; the thresholds for indices 6 to 10 therefore apply to $\log_{10} O$.
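The variability index can be assigned with a cascade of tests, sketched below under these assumptions: the secondary criterion is checked first within each ambiguous probability range, values falling exactly on a boundary go to the lower range, and the thresholds for indices 6 to 10 apply to $\log_{10} O$ (with $P = O/(1 + O)$, $P > 0.9$ already requires $O > 9$, so a limit of $O < 2$ on the linear odds ratio could never be met). The logarithm is passed in separately because recovering it from $P$ loses precision as $P \to 1$. `variability_index` is a hypothetical name.

```c
/* Hypothetical mapping from G-L outputs to the variability index.
 * P: probability of variability; log10_odds: log10 of the total odds ratio;
 * f3, f5: fractions from the secondary criterion. */
int variability_index(double P, double log10_odds, double f3, double f5)
{
    int steady = (f3 > 0.997 && f5 == 1.0);   /* secondary criterion met */

    if (P <= 0.5)
        return 0;                             /* definitely not variable */
    if (P <= 0.67) {
        if (steady)
            return 1;                         /* not considered variable */
        return (P <= 0.6) ? 3 : 4;            /* may be / likely variable */
    }
    if (P <= 0.9)
        return steady ? 2 : 5;                /* probably not / considered */
    /* P > 0.9: considered variable, graded by log10 of the odds ratio */
    if (log10_odds <= 2.0)  return 6;
    if (log10_odds <= 4.0)  return 7;
    if (log10_odds <= 10.0) return 8;
    if (log10_odds <= 30.0) return 9;
    return 10;
}
```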

| Odds ratio range | Probability range | Good detections | False detections | Missed with secondary criterion | False with secondary criterion |
|---|---|---|---|---|---|
| 1.0-2.0 | 0.5-0.67 | 2 | 7 | 1 | 0 |
| 2.0-9.0 | 0.67-0.9 | 5 | 10 | 2 | 0 |
| > 9.0 | > 0.9 | 47 | 0 | 0 | 0 |